One of the main promises of the IIoT (Industrial Internet of Things) is advanced data analytics. As I research what exactly this means and look for use cases, one thing that comes up often is anomaly detection. At first glance, the question that comes to my mind is, "But wait, don’t we already have this?"

When I say "we," like many of you, I’m coming from an industrial automation background: the OT (Operational Technology) side, as it is sometimes referred to today. This is as opposed to the IT (Information Technology) side of things, where many of today’s analytics tools are rooted.

So back to my question. For as long as we’ve had HMI and SCADA systems, we’ve also had the ability to set up events or alarms for when a variable or analog I/O point is out of normal range. So isn’t an anomaly just a value that is out of range?
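For comparison with what follows, here is a minimal sketch of that traditional approach: a fixed high and low limit checked against each new value, one tag at a time. The tag name `TT-101` and the limit values are hypothetical, and real HMI/SCADA packages add deadbands, time delays, and priorities that are left out here.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class LimitAlarm:
    """A fixed high/low limit on one tag, as typically configured in an HMI or SCADA system."""
    tag: str
    low: float
    high: float

    def check(self, value: float) -> Optional[str]:
        """Return an alarm message if the value is out of range, otherwise None."""
        if value < self.low:
            return f"{self.tag} LOW ({value} < {self.low})"
        if value > self.high:
            return f"{self.tag} HIGH ({value} > {self.high})"
        return None


# Hypothetical temperature tag with limits chosen by an engineer.
alarm = LimitAlarm("TT-101", low=20.0, high=80.0)
for reading in [25.0, 79.9, 85.2, 12.4]:
    message = alarm.check(reading)
    if message:
        print(message)
```

Note that the limits are fixed numbers typed in by a person; nothing here learns from the data.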

As I dug in a bit, I realized there is a lot more to anomaly detection than that. I also realized that unless you are a data scientist, statistician, or mathematician, it can get pretty heavy pretty fast. So I wanted to share the basics of what I learned in layman’s terms.

As IIoT applications start to proliferate and the OT and IT worlds merge (or collide?), I think it is important to understand the anomaly detection aspect of analytics and what it can do to enhance traditional industrial process alarming.

So what is anomaly detection?

Anomaly detection is used to identify unusual patterns in data that do not conform to expected behavior. It is often done with Machine Learning, a branch of Artificial Intelligence (AI), although many anomaly detection techniques are purely statistical. At this point, most anomaly detection use cases are found in areas such as network security (intrusion detection), fraud detection (banking), and medical applications.

Anomalies generally fall into one of three categories:

- Point anomalies—A single point of data that is too far off from the rest.

- Contextual anomalies—An instance of data that’s abnormal in a specific context (like the time of day), but not otherwise.

- Collective anomalies—A collection of data instances that are abnormal in relation to the entire data set.

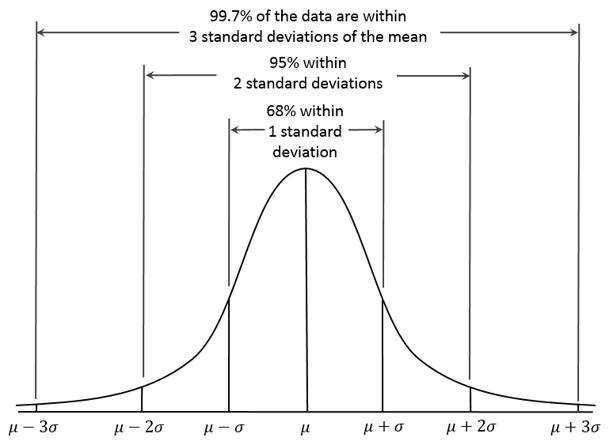
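To make the collective category concrete, consider a stuck sensor. Each individual reading can be perfectly in range, so neither a limit alarm nor a point check will catch it; only the run of identical values as a whole is abnormal. The sketch below flags such flatlines. The function name and the `min_run` and `tolerance` parameters are hypothetical choices for illustration.

```python
def find_flatlines(values, min_run=5, tolerance=1e-9):
    """Return (start, end) index pairs of runs where the value does not change.

    Every reading in a flatline may be normal on its own; it is the run
    as a whole that is abnormal (a collective anomaly).
    """
    runs = []
    start = 0
    for i in range(1, len(values) + 1):
        # A run ends at the end of the data or when the value moves away from the run's first value.
        if i == len(values) or abs(values[i] - values[start]) > tolerance:
            if i - start >= min_run:
                runs.append((start, i - 1))
            start = i
    return runs


# Hypothetical readings: values at indices 3-8 are all in range but identical.
readings = [50.1, 50.4, 49.8, 50.2, 50.2, 50.2, 50.2, 50.2, 50.2, 49.9, 50.3]
print(find_flatlines(readings))
```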

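You are probably familiar with the Gaussian (normal) distribution, the bell curve that is often assumed for data, and with the standard deviation, which measures how widely values spread around the mean. The graphic below shows it: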

This model is very simple—and it does not accurately represent many types of data.
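Simple as it is, the Gaussian model is behind the most common statistical check: learn a mean and standard deviation from data assumed to be normal, then flag any new value more than k standard deviations away (the "3-sigma" rule when k = 3). The sketch below assumes a hypothetical history of temperature readings; the value of k is a conventional choice, not a requirement.

```python
import statistics


def fit_baseline(history):
    """Learn mean and sample standard deviation from data assumed to be normal."""
    return statistics.fmean(history), statistics.stdev(history)


def is_point_anomaly(value, mean, std, k=3.0):
    """Flag values more than k standard deviations from the mean."""
    return abs(value - mean) > k * std


# Hypothetical temperature readings (degrees C) taken during normal operation.
history = [50.2, 49.8, 50.5, 49.9, 50.1, 50.3, 49.7, 50.0, 50.4, 49.6]
mean, std = fit_baseline(history)

for reading in [50.6, 48.9, 53.0]:
    print(reading, is_point_anomaly(reading, mean, std))
```

Unlike the fixed limits of a traditional alarm, these limits come from the data itself, but they only make sense if the data really has a single bell-shaped "normal."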

For example, think of data that could be multimodal, or seasonal. Seasonal (not a reference to seasons of the year) means there are varying states of normal. An example in process control could be a machine or system that has the four "normal" states of running, shutdown, maintenance mode, and startup.
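The sketch below illustrates why multiple normal states break a single bell curve. It assumes hypothetical motor-current data logged in two of the four states and that the current state is known (for example, from the controller). A single Gaussian fitted across both states has such a wide spread that a 5 A draw during shutdown looks fine, while a separate baseline per state flags it. Function names and data are illustrative only.

```python
import statistics
from collections import defaultdict


def fit_state_baselines(history):
    """Learn a separate (mean, standard deviation) for each operating state.

    history: iterable of (state, value) pairs recorded during normal operation.
    """
    by_state = defaultdict(list)
    for state, value in history:
        by_state[state].append(value)
    return {s: (statistics.fmean(v), statistics.stdev(v)) for s, v in by_state.items()}


def is_anomalous(state, value, baselines, k=3.0):
    """Compare a value only against the baseline for the machine's current state."""
    mean, std = baselines[state]
    return abs(value - mean) > k * std


# Hypothetical motor current (amps) logged in two of the four states.
history = [("running", 40.1), ("running", 39.8), ("running", 40.4),
           ("running", 39.9), ("running", 40.2),
           ("shutdown", 0.0), ("shutdown", 0.1), ("shutdown", 0.2),
           ("shutdown", 0.1), ("shutdown", 0.0)]
baselines = fit_state_baselines(history)

# A single bell curve fitted across both states has a very wide spread...
values = [v for _, v in history]
g_mean, g_std = statistics.fmean(values), statistics.stdev(values)
print("single Gaussian flags 5 A:", abs(5.0 - g_mean) > 3 * g_std)
# ...while the per-state baseline treats 5 A during shutdown as abnormal.
print("per-state flags 5 A in shutdown:", is_anomalous("shutdown", 5.0, baselines))
```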

The three main types of anomaly detection are supervised, unsupervised, and hybrid (or semi-supervised).

Supervised anomaly detection requires labeled training data: each data point is labeled as either normal or abnormal. To build a training set, one could start with a set of known abnormal points and add normal data. There are also more sophisticated methods for developing training classifiers.
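As a minimal sketch of the supervised idea, the example below uses a nearest-centroid classifier, one of the simplest classifiers and chosen here only for illustration: it averages the labeled normal and abnormal examples and assigns a new point the label of the closer average. The features (vibration and bearing temperature) and all values are hypothetical, and in practice features would normally be scaled so that one unit does not dominate the distance.

```python
import math


def train_centroids(training):
    """training: list of (features, label). Returns {label: mean feature vector}."""
    sums, counts = {}, {}
    for features, label in training:
        acc = sums.setdefault(label, [0.0] * len(features))
        for j, x in enumerate(features):
            acc[j] += x
        counts[label] = counts.get(label, 0) + 1
    return {label: [s / counts[label] for s in acc] for label, acc in sums.items()}


def classify(features, centroids):
    """Return the label whose centroid is closest in Euclidean distance."""
    return min(centroids, key=lambda label: math.dist(features, centroids[label]))


# Hypothetical labeled history: (vibration mm/s, bearing temperature C).
training = [((2.1, 45.0), "normal"), ((1.9, 44.0), "normal"), ((2.3, 46.5), "normal"),
            ((7.8, 70.0), "abnormal"), ((8.5, 72.5), "abnormal")]
centroids = train_centroids(training)
print(classify((2.0, 47.0), centroids))
print(classify((7.0, 66.0), centroids))
```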

Supervised anomaly detection can present challenges, such as cases where abnormal is not well defined, where the definitions of normal and abnormal change frequently (seasonal), or where the data contains noise that resembles abnormal behavior.

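When no training data is available, unsupervised anomaly detection techniques can be used to find anomalies in unlabeled data. Because labeled examples of failures are often scarce in industrial settings, this type of anomaly detection holds particular promise for industrial and operational technology applications. Hybrid (semi-supervised) techniques fall in between: typically, a model is trained only on data known to be normal and then flags anything that deviates from it.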

Some popular techniques for unsupervised anomaly detection are density-based methods, clustering, and support vector machines (SVMs). Some commercial algorithms are patented, but many well-established techniques from academic research are in practical use.
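A minimal sketch of one simple density-based idea: score each unlabeled point by its average distance to its k nearest neighbors, so points in sparse regions get high scores. No labels are needed. The data and `k=3` are hypothetical; production tools typically use refinements such as Local Outlier Factor and scale the features first.

```python
import math


def knn_scores(points, k=3):
    """Anomaly score = mean distance to the k nearest neighbors (requires k < len(points)).

    Points in low-density regions get high scores.
    """
    scores = []
    for i, p in enumerate(points):
        dists = sorted(math.dist(p, q) for j, q in enumerate(points) if j != i)
        scores.append(sum(dists[:k]) / k)
    return scores


# Hypothetical unlabeled data: two normal operating clusters and one stray point.
points = [(1.0, 1.0), (1.1, 0.9), (0.9, 1.2), (1.2, 1.1), (1.0, 0.8),
          (5.0, 5.0), (5.1, 4.9), (4.9, 5.2), (5.2, 5.0),
          (3.0, 9.0)]
scores = knn_scores(points, k=3)
most_anomalous = max(range(len(points)), key=scores.__getitem__)
print(points[most_anomalous])
```

Because each point is compared with its own neighborhood, the two separate clusters are both treated as normal, which a single mean and standard deviation could not do.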

Anomaly detection vs. events & alarms

So back to our question. When comparing anomaly detection to traditional HMI or SCADA threshold events, one difference is that anomaly detection can learn what normal looks like from the data, without being explicitly programmed with limits.

Another important point is that even though configuring events & alarms in an HMI system can be very sophisticated (especially when used in conjunction with the programming environment of the hardware controller), we are typically dealing with each metric individually.

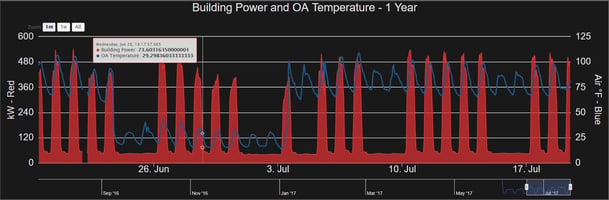

Viewed individually, single metrics may not reveal much. But together, they can tell a more complete story, as different patterns can coexist and may be intertwined.

In anomaly detection, this is called multivariate analysis: many signal inputs are taken in as one dataset. This approach has challenges, such as scale, and difficulties when the input signals do not all behave homogeneously. That said, many anomaly detection algorithms combine univariate and multivariate methods to take advantage of the strengths of each.
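One classic multivariate technique is the Mahalanobis distance, chosen here for illustration since the text does not name a specific algorithm. It measures how far a point is from the mean in units of the data's own covariance, so it notices when two correlated signals stop moving together. The sketch assumes two hypothetical signals, pump flow and motor current, that normally rise together. The test point (19, 22) has each value inside its historical range, so per-tag limits would pass it, but the combination is far from normal.

```python
import math
import statistics


def fit_2d(samples):
    """Mean vector and 2x2 sample covariance matrix of (x, y) pairs."""
    xs, ys = zip(*samples)
    mx, my = statistics.fmean(xs), statistics.fmean(ys)
    n = len(samples)
    sxx = sum((x - mx) ** 2 for x in xs) / (n - 1)
    syy = sum((y - my) ** 2 for y in ys) / (n - 1)
    sxy = sum((x - mx) * (y - my) for x, y in samples) / (n - 1)
    return (mx, my), ((sxx, sxy), (sxy, syy))


def mahalanobis(point, mean, cov):
    """Distance from the mean, measured in units of the data's own covariance."""
    (a, b), (_, d) = cov
    det = a * d - b * b  # must be non-zero (signals not perfectly correlated)
    dx, dy = point[0] - mean[0], point[1] - mean[1]
    # Quadratic form [dx dy] * inverse(cov) * [dx dy]^T for a symmetric 2x2 matrix.
    q = (d * dx * dx - 2 * b * dx * dy + a * dy * dy) / det
    return math.sqrt(q)


# Hypothetical normal history: (pump flow m3/h, motor current A); they rise together.
history = [(10, 20.5), (12, 23.0), (14, 29.0), (16, 31.5), (18, 37.0), (20, 39.0)]
mean, cov = fit_2d(history)

print(round(mahalanobis((17, 34.0), mean, cov), 2))  # consistent with history
print(round(mahalanobis((19, 22.0), mean, cov), 2))  # each value in range, combination is not
```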

Also, trying to code seasonal patterns into conventional setpoint-based alarming can become complex and result in false positives and false negatives. Aside from well-understood seasonal patterns, process and machine applications may also experience "new normals," where conditions change over the life of the machine or process. Anomaly detection may offer a more adaptive approach, or complement traditional HMI or controller alarming.
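As a minimal sketch of what "adaptive" can mean, the detector below keeps an exponentially weighted moving average (EWMA) of the mean and variance, one simple adaptive technique among many. After a step change to a new operating level, it flags the first few samples and then accepts the new level as normal. The class name and the `alpha`, `k`, and `warmup` parameters are hypothetical illustration choices.

```python
import math


class EwmaDetector:
    """Adaptive baseline using an exponentially weighted mean and variance.

    alpha controls how quickly the baseline follows a "new normal";
    k is the number of standard deviations that counts as anomalous;
    warmup is how many samples to learn from before flagging anything.
    """

    def __init__(self, alpha=0.1, k=3.0, warmup=10):
        self.alpha, self.k, self.warmup = alpha, k, warmup
        self.mean = None
        self.var = 0.0
        self.n = 0

    def update(self, x):
        """Return True if x is anomalous versus the current baseline, then learn from x."""
        if self.mean is None:
            self.mean, self.n = x, 1
            return False
        std = math.sqrt(self.var)
        anomalous = self.n >= self.warmup and abs(x - self.mean) > self.k * std
        # Incremental exponentially weighted update of the mean and variance.
        diff = x - self.mean
        incr = self.alpha * diff
        self.mean += incr
        self.var = (1 - self.alpha) * (self.var + diff * incr)
        self.n += 1
        return anomalous


# Hypothetical signal: steady around 50, then a permanent step to a new normal around 60.
before = [50.0 + (0.5 if i % 2 else -0.5) for i in range(40)]
after = [60.0 + (0.5 if i % 2 else -0.5) for i in range(200)]
detector = EwmaDetector(alpha=0.1, k=3.0, warmup=10)
flags = [detector.update(x) for x in before + after]
print("flagged at indices:", [i for i, f in enumerate(flags) if f])
```

A fixed setpoint would keep alarming forever after the step; here a smaller `alpha` adapts more slowly and a larger one forgets the old normal faster.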

Anomaly detection tools

So what commercial off-the-shelf (COTS) tools are out there in the marketplace for anomaly detection?

Many of the large IT companies have IoT platforms with data analytics that include anomaly detection. Examples are IBM Watson IoT, Amazon Web Services (AWS), and Microsoft Azure. (Our video shows how to get real-world data into IBM Watson.)

Additionally, many boutique companies specializing in data analytics offer anomaly detection for industrial, process, or machine data. One example is Sayantek, which performs anomaly detection and other analytics at the edge (meaning on the same network as the machine or process, rather than in the cloud).

This brings up the question of whether anomaly detection should be done at the edge or in the cloud. A lot has been written about this topic, and the consensus seems to be that due to the volume of data in many IoT applications, some preprocessing should indeed be done before moving data to the cloud. This concept is supported by efforts like the Linux Foundation’s EdgeX Foundry (initially started by Dell).
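The preprocessing idea can be sketched in a few lines: the edge device reduces each window of raw samples to a small summary and keeps only the raw points that look abnormal, so far less data travels to the cloud. This is a hypothetical illustration, not how any particular product or EdgeX Foundry does it. Fixed limits stand in for the detector, and the window size and limits are made-up values; any of the detectors above could take their place.

```python
import statistics
from dataclasses import dataclass, field


@dataclass
class WindowSummary:
    """What the edge device forwards for one window instead of every raw sample."""
    start_index: int
    count: int
    minimum: float
    mean: float
    maximum: float
    flagged: list = field(default_factory=list)  # raw (index, value) pairs kept for the cloud


def summarize_at_edge(values, window, low, high):
    """Reduce raw samples to one summary per window, keeping only out-of-limit raw points."""
    summaries = []
    for start in range(0, len(values), window):
        chunk = values[start:start + window]
        flagged = [(start + i, v) for i, v in enumerate(chunk) if not low <= v <= high]
        summaries.append(WindowSummary(start, len(chunk), min(chunk),
                                       statistics.fmean(chunk), max(chunk), flagged))
    return summaries


# Hypothetical: 100 raw samples with one spike, summarized in windows of 25.
raw = [50.0] * 100
raw[37] = 95.0
summaries = summarize_at_edge(raw, window=25, low=20.0, high=80.0)
for s in summaries:
    print(s)
```

Here 100 raw samples become four summaries plus one flagged point, while the cloud still sees when and where the spike occurred.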

So anomaly detection likely has a place alongside our traditional HMI and SCADA setpoint thresholds at manufacturing and process companies. Anomalies may not just point out issues, but actually provide opportunities to improve our operations.