The holy grail of IIoT applications is the ability to basically predict the future.

We want to know that something in our process, whether an entire system or a single component, is going to fail before it actually does.

That way, we can dispatch replacement parts and service technicians when and where they are needed, ahead of the failure.

Maximizing uptime, improving our overall equipment effectiveness (OEE), and generally making our businesses more efficient and competitive are some of the many possible outcomes of implementing predictive analytics.

But there’s a massive gap between the promised outcomes of predictive analytics and the path required to actually build a real-world application that might help us tune our OEE in real time from a mobile device.

Predictive analytics is not an easy discipline to get one's head around, much less implement. It draws on many other sciences and technologies, including several that have become buzzwords we’re all familiar with, like big data, machine learning, and cognitive computing.

But if we break the topic down into smaller, more easily understood pieces, we may begin to see the forest for the trees and understand the building blocks needed to add predictive analytics to our current industrial applications.

And the first building block we’re going to focus on is data.

We hear about big data all the time. But what exactly is it, where does it come from, and how is it used for predictive analytics in industrial automation and process control applications?

If you think about a typical industrial application today, we’re already collecting and logging a lot of data. We log almost everything related to our process inputs and outputs, as well as our control system variables.

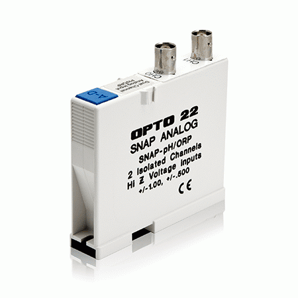

Take a typical water or wastewater treatment application. We may be logging all kinds of data from the sensors attached to our control system: dissolved oxygen, pH, oxidation-reduction potential (ORP), turbidity, and many other data points.

In semiconductor manufacturing, we may be logging data on things like yield levels and solder temperatures. Or, in a building automation application, we might be logging peak load levels when all our chillers switch on at the same time.

All of this control system data is the big data our IIoT applications need to consume before they can begin performing predictive maintenance. Cloud-based applications need to tap into our control systems as a data source. These applications can consume many data formats, but a very common format in industrial applications is time series data.

Time series data is a set of data points with associated timestamps. Basically, it's a recording of a variable or data point over time.
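As a minimal sketch of that idea, a time series can be modeled in Python as an ordered list of timestamp/value pairs. The tag name `solder_temp_c`, the start time, and the readings below are made up for illustration, and the example assumes one reading per second.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass(frozen=True)
class Sample:
    """One time series data point: a timestamp plus the value recorded at that time."""
    timestamp: datetime
    value: float


# Hypothetical tag name and readings, for illustration only.
tag = "solder_temp_c"
start = datetime(2024, 1, 1, 8, 0, 0, tzinfo=timezone.utc)
readings = [245.1, 245.3, 246.0, 245.8]

# Pair each reading with the second at which it was (hypothetically) logged.
series = [Sample(start + timedelta(seconds=i), v) for i, v in enumerate(readings)]

for s in series:
    print(tag, s.timestamp.isoformat(), s.value)
```

Real historians and time series databases add metadata such as units and quality flags, but the core shape is the same: a value tied to a point in time.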

Now, if we think about some of our applications and how often we record the data we're interested in—for example, solder temperature every second over an eight-hour production run—we can easily see how time series data can become what we refer to as big data.
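To put rough numbers on that, here is a back-of-the-envelope calculation. One sample per second over eight hours is 8 × 3,600 = 28,800 samples per tag per run. The plant size (500 logged tags, three runs per day) and the 16 bytes per sample (an 8-byte timestamp plus an 8-byte float) are hypothetical assumptions, and the estimate ignores compression and storage overhead.

```python
SECONDS_PER_HOUR = 3600


def samples_per_run(sample_rate_hz: float, run_hours: float) -> int:
    """Number of samples one tag produces over one production run."""
    return round(sample_rate_hz * run_hours * SECONDS_PER_HOUR)


def bytes_per_year(samples: int, tags: int, runs_per_day: int,
                   bytes_per_sample: int = 16, days: int = 365) -> int:
    """Raw storage for many tags and runs, ignoring compression and overhead.

    The 16-byte default assumes an 8-byte timestamp plus an 8-byte float value.
    """
    return samples * tags * runs_per_day * days * bytes_per_sample


n = samples_per_run(sample_rate_hz=1, run_hours=8)
print(n)  # 28800

# Hypothetical plant: 500 logged tags, three eight-hour runs per day.
total = bytes_per_year(n, tags=500, runs_per_day=3)
print(f"{total / 1e9:.1f} GB per year")  # 252.3 GB per year
```

Even with these modest assumptions, a single year of raw readings runs to roughly a quarter of a terabyte.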

Once we've collected time series data from our processes, we can begin to analyze it and extract meaningful statistics.
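As a simple starting point, the sketch below computes basic summary statistics for one run using Python's standard `statistics` module. The solder temperature readings are hypothetical values chosen only to illustrate the calculation.

```python
import statistics

# Hypothetical solder temperature readings (degrees C) from one run.
solder_temp_c = [245.1, 245.3, 246.0, 245.8, 247.2, 244.9, 245.5]

summary = {
    "count": len(solder_temp_c),
    "mean": statistics.mean(solder_temp_c),
    "stdev": statistics.stdev(solder_temp_c),  # sample standard deviation
    "min": min(solder_temp_c),
    "max": max(solder_temp_c),
}

for name, value in summary.items():
    print(f"{name}: {value:.2f}")
```

On real data, the same statistics are often computed over sliding time windows so that drift within a run becomes visible.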

For example, we may find that at a particular solder temperature, our yield level drops by some percentage. Time series data helps expose correlations like this.
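One common way to quantify such a relationship is the Pearson correlation coefficient, which ranges from -1 to 1. The sketch below assumes the two series have already been aligned, here as hypothetical per-batch averages of solder temperature and yield. Real logs would first need their timestamps matched up.

```python
import math
from typing import Sequence


def pearson(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson correlation coefficient between two equal-length series."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two series of equal length >= 2")
    mx = sum(x) / len(x)
    my = sum(y) / len(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)


# Hypothetical per-batch averages: solder temperature (C) and yield (%).
solder_temp_c = [240.0, 243.0, 246.0, 249.0, 252.0]
yield_pct = [98.5, 98.1, 97.2, 96.0, 94.8]

r = pearson(solder_temp_c, yield_pct)
print(f"r = {r:.3f}")
```

A value of `r` close to -1 means yield tends to fall as temperature rises in this made-up data. Correlation alone does not prove that temperature causes the drop, but it points to where to look.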

But to do really interesting stuff with data, we need access to a lot of it. For example, maybe we want to mash up our yield level, solder temperature, and the manufacturer's recommended procedures for the type of solder we're using.
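A minimal sketch of such a mash-up might join per-batch process data with a recommended temperature window and compare yield inside and outside that window. The `SolderSpec` class, the 235 to 250 °C window, and the batch values are all hypothetical placeholders; a real application would take the window from the solder manufacturer's actual datasheet.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SolderSpec:
    """Hypothetical recommended temperature window from a solder datasheet."""
    min_c: float
    max_c: float

    def in_spec(self, temp_c: float) -> bool:
        return self.min_c <= temp_c <= self.max_c


# Illustrative values only, not taken from any real datasheet.
spec = SolderSpec(min_c=235.0, max_c=250.0)

# Hypothetical per-batch averages: (batch id, solder temp C, yield %).
batches = [
    ("B1", 240.0, 98.5),
    ("B2", 246.0, 97.2),
    ("B3", 252.0, 94.8),
]


def yield_by_spec(batches, spec):
    """Average yield for batches inside vs. outside the recommended window."""
    groups = {True: [], False: []}
    for _batch_id, temp_c, yield_pct in batches:
        groups[spec.in_spec(temp_c)].append(yield_pct)
    return {
        ("in_spec" if inside else "out_of_spec"): (sum(v) / len(v) if v else None)
        for inside, v in groups.items()
    }


result = yield_by_spec(batches, spec)
print(result)
```

With only three batches this is a toy, but the same join-and-compare pattern scales to thousands of runs once the data lives somewhere built to handle it.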

As we add more and more data to our predictive analytics applications, moving that data into something designed to cope with that volume of information becomes a necessity.

There are specific software tools for mining and analyzing big data. We'll cover those in a follow-up post.